The Sunday Blog: Advanced programming

Files and databases

The Nextion Intelligent P-Series HMI displays become more and more popular because these have much more horsepower under the hood, are available in multiple sizes from 4.3″ to 10.1″, with a capacitive (more comfortable and multi-touch capable) or a resistive (more robust in rough RF environments) touch engine, and most of them can be ordered boxed in a hobbyist and maker friendly easy to mount housing with an integrated speaker.

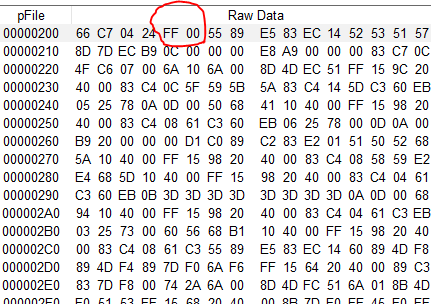

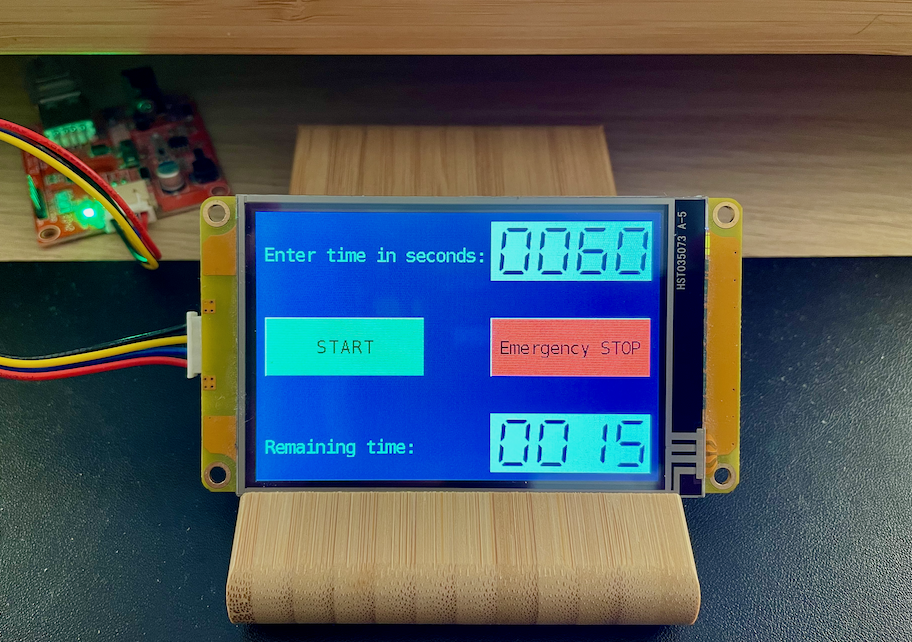

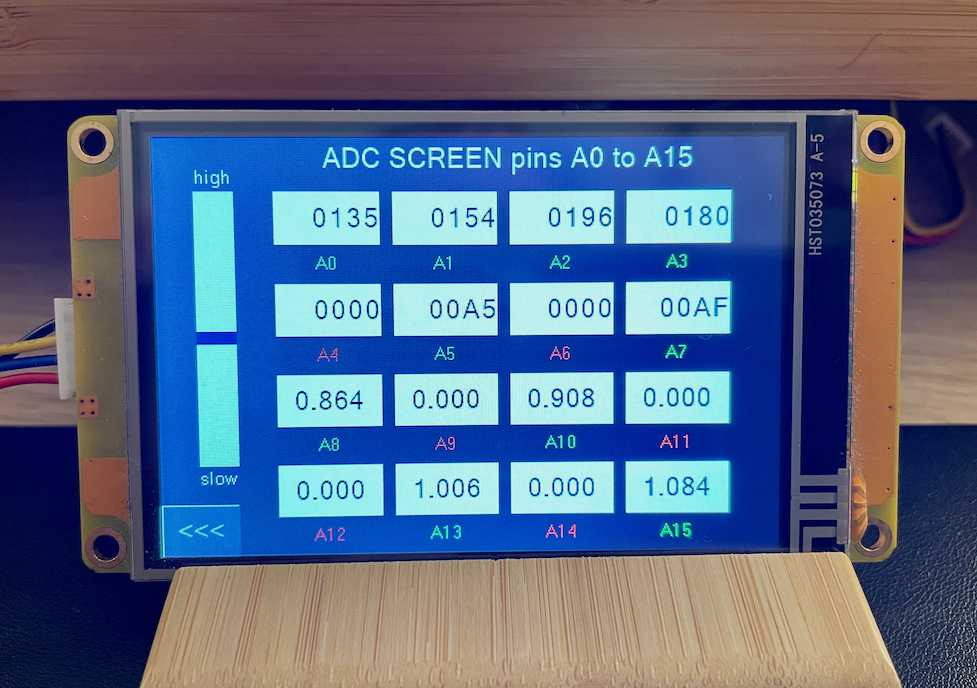

Due to the much more powerful integrated processor and the bigger memory, the Nextion firmware offers also additional components and functions. But up to now, these are not often mentioned in Nextion-related forums and groups. Thus, I decided to write about the FileStream component which allows random read/write access to files on the SD card or in a reserved area of the RAM. But this time I want to go beyond simple text and ini files like in the demo project in this article and discover together with my dear readers how database tables can be handled.

History and theory first!

In earlier times of the humanity, things were simple. The only way to exchange structured information was the spoken language. Single words were combined to phrases, phrases were combined to stories. Theoretically, words were separated by short breaks, and phrases by the intonation and/or breathing. Our context-sensitive and fault-tolerant brain allows in most cases to understand a text, even if spoken with wrong intonation or without word separation. Later, people started to write information down. With respect to the respective supports (stone, leather, copper, bronze, papyrus) and to improve readability, new information sub-entities like paragraphs and pages were introduced. Words became separated by blank spaces, sentences or sentence parts by interpunction, paragraphs by a blank line and/or indentation. This was an ongoing process. Early sources like the Qumran scrolls had not always clearly defined word spacing or interpunction which required much working time by many experts to transliterate the contents into modern written language conventions.

Over the time, and especially after Johannes Gutenberg invented the printing machine which allowed to distribute more information at lower cost, another phenomenon arose: the indexing. When books had more and more pages, it became difficult to find a specific part of it. Thus, books were divided into chapters and an index allowed to make the link between the beginning of a chapter and its page number. Still later, when dictionaries and other scientific publications were distributed, the indexing climbed to the next level: the keyword index was born. On a few pages at the end of the book, all important keywords were listed in alphabetical order, with all the page numbers where this keyword appeared aside.

Why did I tell you all that? Because it is essential to know the history of “information storage” to understand how nowadays’ databases are organized.

Data stored in electronic formats

When it comes to move away from paper and to store data in an electronic format, some of the above considerations remain important while others become irrelevant. Let’s look at a few example cases:

If a simple floating text is stored in electronic form, everything depends on the purpose: If it is just intended to be reproduced in a readable form, be it on a screen or as a printed hardcopy, the same rules apply as for the readability of printed text. Clear word separations, interpunction, paragraphs, and paging improve the readability but are not forcibly required. The human reader is fault tolerant and will still get the information, even if the text is badly structured or if there are typos. But if we want the machine to process the text, for example by building an index or a keyword index by itself after scanning the text file once, we have to make sure that all separators between words, sentences, paragraphs and pages are 100% ok, because the microprocessor is in most cases not able to do context-sensitive analysis and fault correction, especially not in small embedded systems.

Condensed data – tables

Often, and especially in order to easily sort, find, correlate, and aggregate data, the elementary information is not stored as floating text, but in table form. A table consists most times of a header row indicating the type of data found in the respective columns below, followed by 1 to n data rows, containing one field per column. Since data organized in tables is a format which is more optimized for electronic processing and readability is not the priority, paragraphs and pages are not required to store everything in structured form. But it is very important that the field and row separators are 100% error free. Imagine that a field separator is missing in a few rows. When looking up data in a specific column, our system would not find a result in the aforementioned erroneous rows because due to the missing separator, information has slipped 1 column to the left and is thus invisible.

Just for the sake of completeness, we should mention that field and row separators are not the only way to organize data in tables. One can also define fixed lengths (in bytes) per field, from which the row (or sometimes called record) length can be deduced. But that comes at a high cost: In each row, each field has to be dimensioned to hold a maximum of bytes, even if only one (or none) is needed in a specific row. That’s highly inefficient and bloats the file for nothing, which made that this format has almost been abandoned, today.

The first step from floating text to a table structure: The CSV file

A CSV file is basically a text file which contains structured table data. There is no unique standard, but there are a few common conventions which make the csv format relatively well interchangeable between different systems. In order to read and to process csv data, the consuming system needs a few informations:

1.) Does the first line contain header information (like field names) or data ?

2.) What is the row (or record) separator? The common standard is that records are separated by a new line control character. But, depending on the OS, this can be \n, \r, or even two characters: \r\n

3.) What is the field separator? Often, a comma, or a semicolon is used, but sometimes, one might find a vertical line character or a colon.

4.) What is the escape character? In some cases, a field or record separator may appear as part of field data. To avoid confusion and structural errors while interpreting the file, such characters have to be preceded by an escape character. This is in 99.9% of cases the backslash \.

5.) How can data which looks purely numeric be enforced to be interpreted as text? Again, in 99% of cases, by putting it into double quotes. If this is used, all double quotes which might be part of field data have to be preceded by the escape character.

Knowing these 5 parameters, we can now open and process a csv file. Fortunately, our P-Series HMI has another component, the DataRecord which allows to display structured data in table form. This, and the FileStream component form a powerful pair, which we’ll demonstrate in a future article. But to make optimal use of this, I think that it was important to go into all the above “dry” details for the best understanding and most profitable use.

Thank you for reading and happy nextioning!