The Sunday blog: HMI, cybernetics and the steam engine

Part 2: It becomes very complex

In the previous episode of this blog we saw how the interaction between man and his work has changed over the course of humanity’s evolution. With the invention of tools and machines, man lost direct physical contact with his workpiece and could no longer touch and feel it. At the same time, the energies involved grew ever larger and could no longer be regulated “by hand”. New ways of interaction between man and machine were sought and found: sensors, display instruments and controls.

Interaction with complex mechanical systems

However, these ways of interaction had to fulfil an important condition: the cybernetic control loop (see the previous episode) must not be interrupted. It was, and still is, necessary to display the relevant information about the machine's status, in real time if possible, so that the human being can make the decisions that the machine cannot make on its own. Man then has to be able to communicate his decisions and requirements back to the machine. Meanwhile, machines and systems were becoming increasingly complex, so that the simple control panel sometimes grew into an entire control room.

The computer

In parallel, a whole new generation of machines was created. Unlike their predecessors, they did not relieve man of physical or mechanical work but were able to perform intellectual work, in particular complex calculations and logical decisions along predefined processes. The computer was born! Again, several cybernetic iterations were needed until man had found out how best to interact with it. There was much experimentation, including punched cards, teletypewriters and large switch panels with an indicator light and a switch for each bit in each register.

Integrated process control

It soon became apparent that computers were also excellent for the process control of large machines, especially once graphical displays and ergonomic input devices such as the mouse were available. For example, it became possible to monitor and control four nuclear reactors from a relatively small room.

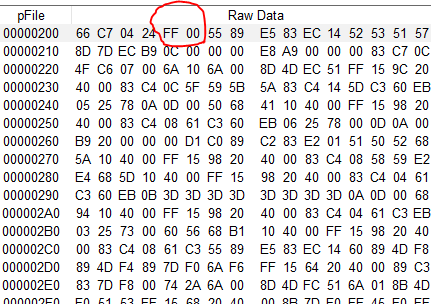

Embedded technologies

And so our time travel has brought us to the present. Extremely powerful computers can now be found in every household, but they are greatly oversized for simple tasks such as interacting with the weather station in the garden, monitoring the aquarium or controlling the dishwasher. This gave rise to the so-called embedded MCUs, which are significantly smaller, cheaper and more energy-efficient. Thanks to the Arduino platform, they have also found their way into the hobby world.

Developers now suddenly faced another dilemma. Simple LEDs or monochrome text LCDs with two or four lines of 20 characters each, together with push buttons and switches connected directly to GPIO pins of the MCU, could not keep up with ergonomic requirements. On the other hand, many of the small embedded MCUs do not have the capacity to drive a color display of the kind one is used to from a computer in addition to their actual task. A small color LCD with 320 x 240 pixels, 16-bit color depth and a rather comfortable frame rate of 15 fps needs 18.43 Mbit/s (320 x 240 x 16 bit x 15) just for the transmission of the pixels, and a 153.6 kB frame buffer (320 x 240 x 2 bytes) to render the picture. This is unthinkable on an Arduino UNO with a clock frequency of 16 MHz and 2 kB of RAM!
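The figures above are easy to check. The following is a minimal sketch of the arithmetic only; the function name `display_requirements` and the constant `UNO_RAM_BYTES` are made up for this illustration, and the 2 kB of RAM is read as 2048 bytes.

```python
def display_requirements(width, height, bits_per_pixel, fps):
    """Return (frame_buffer_bytes, pixel_rate_bits_per_s) for uncompressed pixel data."""
    pixels = width * height
    frame_buffer_bytes = pixels * bits_per_pixel // 8   # one full frame in memory
    pixel_rate = pixels * bits_per_pixel * fps          # raw bits sent per second
    return frame_buffer_bytes, pixel_rate


UNO_RAM_BYTES = 2 * 1024  # assumption: "2 kB" taken as 2048 bytes

buf, rate = display_requirements(320, 240, 16, 15)
print(f"frame buffer:    {buf / 1000:.1f} kB")        # 153.6 kB
print(f"pixel data rate: {rate / 1e6:.2f} Mbit/s")    # 18.43 Mbit/s
print(f"frame buffer is {buf / UNO_RAM_BYTES:.0f}x the UNO's RAM")  # 75x
```

A single frame would therefore need about 75 times the RAM of the whole MCU, before the actual application has stored a single variable.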

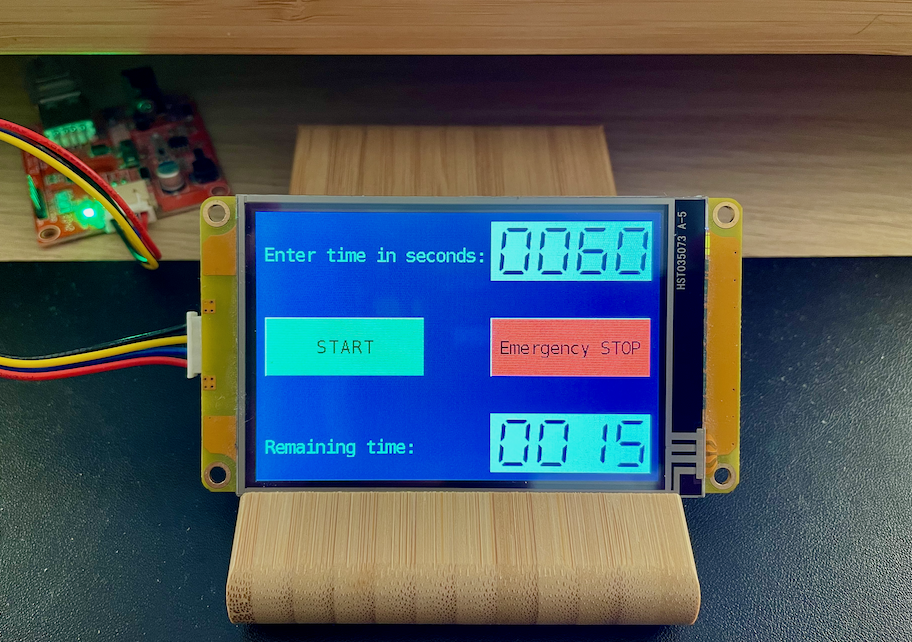

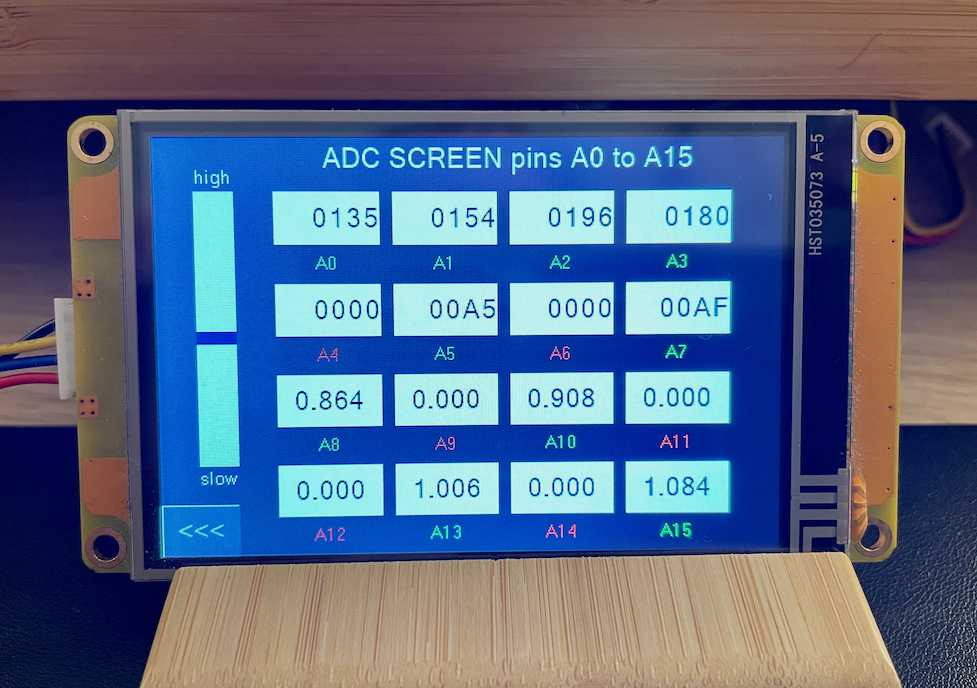

The HMI concept for embedded systems

So the obvious idea is to give the LCD its own processor, which takes care of rendering, buffering and transfer via DMA. In a further step, this processor also queries the touch surface of the display, so that our MCU is relieved of all these tasks and only has to exchange a few essential pieces of data with the display. And this is how the Nextion HMI ecosystem was created.
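To give a feel for how little data this can be, here is a rough sketch. It assumes the ASCII instruction format documented for Nextion displays, in which every instruction is terminated by three 0xFF bytes. The component name `t0`, the temperature text and the helper `build_text_command` are hypothetical and only serve as an illustration; text containing quotation marks would need extra handling that is left out here.

```python
TERMINATOR = b"\xff\xff\xff"  # assumption: three 0xFF bytes end each instruction


def build_text_command(component: str, text: str) -> bytes:
    """Build an instruction that sets the .txt attribute of a text component."""
    return f'{component}.txt="{text}"'.encode("ascii") + TERMINATOR


# Hypothetical example: show a temperature in a text field called t0.
cmd = build_text_command("t0", "21.5 C")

FRAME_BYTES = 320 * 240 * 2  # one uncompressed 16-bit frame, for comparison
print(cmd)
print(f"{len(cmd)} bytes on the serial line instead of {FRAME_BYTES} bytes per frame")
```

On a real MCU these few bytes would simply be written to a UART; the display's own processor does the rendering.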

How this works in detail, and in which application areas it brings advantages in terms of ergonomics, design and cost-effective production, will be examined next Sunday!